As promised, here is my article about the road ahead for the System Center (SC) products, grouped per product.

Please note that it is based on my personal interpretation. I am only human, so I am prone to error. I do not pretend to be a ‘know-it-all’ or to be able to foresee the future. Nor does this posting represent Microsoft’s view in any way. It is just me thinking out loud.

Having said all that, here it comes.

Overall impression

Microsoft is seriously taking care of its business. The speed at which it develops new products and updates its software portfolio is most impressive. And yet, speed is not the only keyword here. It goes hand in hand with quality, customer feedback and trends. So Microsoft isn’t just developing software; it develops based on a vision, input from its customers and trend analysis. Also, to put it more bluntly, they ‘put their money where their mouth is’. Microsoft doesn’t only talk about the Cloud but invests heavily in it. How else can one explain Microsoft deploying 10,000 servers per month? So the Cloud is coming. It is going to happen. The dynamics and vibes at Tech-Ed were evident. A lot is happening or about to happen. So this is no sleepy company, but one that is awake, alive and kicking (in a decent manner, that is)!

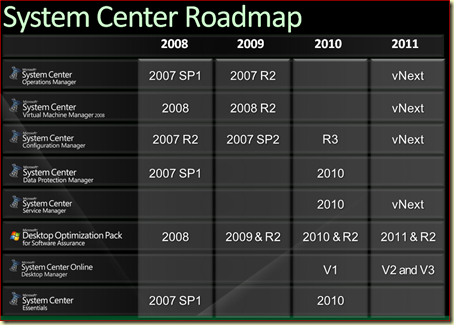

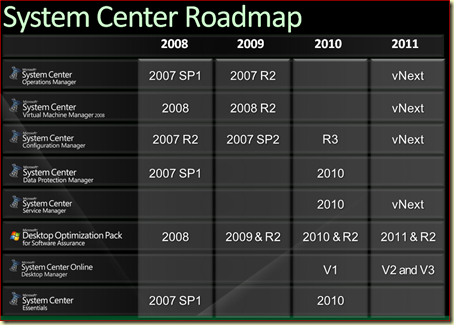

Roadmap SC Products

Since a picture says more than a thousand words, just take a look at this:

(All the people working on the SC product line have their work cut out for them in the months and years to come...)

More detailed information per product:

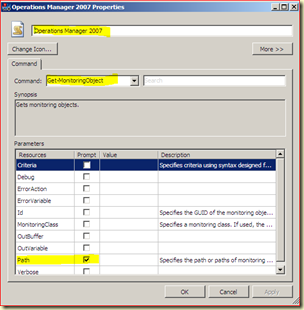

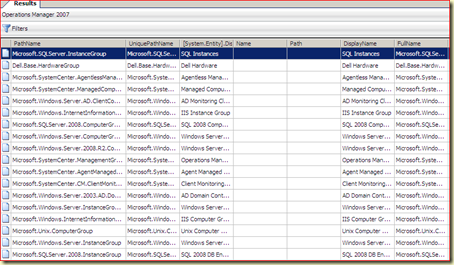

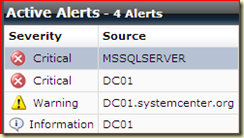

SCOM/OpsMgr

With the recent release of R2, SCOM/OpsMgr has grown up to a level where it can compete with IBM TEC and HP OVO. On top of that, Microsoft has stepped up the development and publishing of MPs. For instance, the MP for Exchange 2010 became available almost in the same week that Exchange 2010 itself went RTM. The MPs for Windows 7 and Windows Server 2008 R2 also came out on a fast schedule. Besides that, the overall quality of the MPs has improved as well. The guides, a very important part of every MP, have grown up too. The days when an MP guide was a mere 10 pages long are gone.

Personally, I think that in 2010 we won’t see any really big changes for SCOM. I do not expect an R3 release. Perhaps a Service Pack or a Rollup Package, but no major release. Anything that could have gone into an R3 release will instead show up in vNext. The reason is that SCOM has already made very serious progress compared to other SC products like SCCM. However, in 2011 the newest version of SCOM, vNext, will be released. This version will be at least as revolutionary as the move from MOM 2005 to SCOM 2007 RTM.

Why?

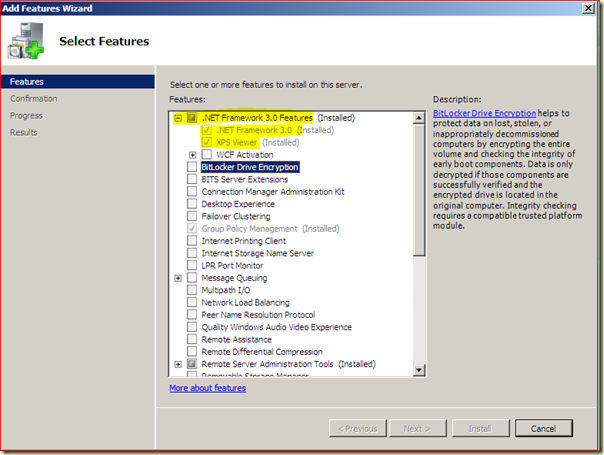

UI

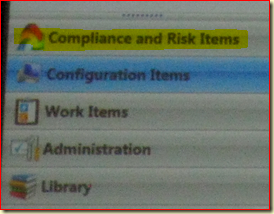

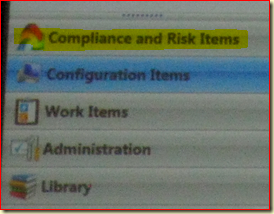

First of all, the UI. Even in SCOM R2 it is somewhat locked down. There is no way to add an additional Wunderbar (like Administration, Authoring or Reporting) and the like. In the demos of SCSM and SCCM vNext, these SC products used the new UI, which is much more extensible by default. Here third parties can create their own Wunderbars and add more functionality. So SCOM vNext will become much more of a framework in which all kinds of extensions are possible. Travis Wright, Lead Program Manager for SCSM, has provided me with a link about how to create your own Wunderbars, to be found here. Thanks Travis!

(Wunderbars of the SCSM pre-beta, with an additional Wunderbar ‘Compliance and Risk Items’, added by importing an MP developed by Microsoft.)

With MPs for that version of SCOM, vendors will have far more ability to extend SCOM, making it an even more flexible product than it already is.

Monitoring Network Devices

From what I heard during the sessions, SCOM vNext will have much more out-of-the-box capability to monitor network devices.

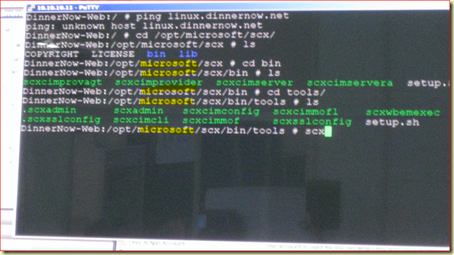

Cross Platform Functionality

SCOM monitors non-Microsoft platforms based on two pillars: the *NIX agent and the related MP. This way, SCOM itself doesn’t have to be partially rewritten to support additional *NIX distributions.

Data Warehouse

With SCSM vNext there is a big chance that this product will contain the Data Warehouse for other SC products as well. So the Data Warehouse of SCOM is likely to move to SCSM. Whether this means the SCOM vNext reports will only be found in SCSM, or in SCOM as well, I do not know.

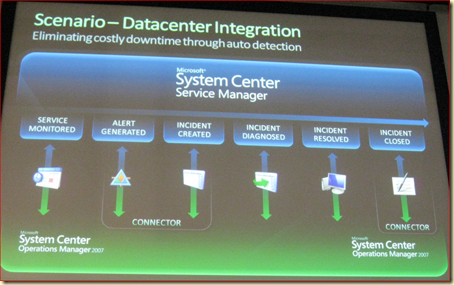

Umbrella SCSM and SLAs

From all I have seen and heard during the sessions, SCSM will become the umbrella for the other SC products. So SCOM vNext will be integrated into SCSM to a great extent, making the whole workflow of IT management processes more accessible and manageable as well. Monitoring of SLAs will be greatly enhanced, since SCSM is going to be THE tool of all SC products for workflow management/automation. So SCSM will contain much more (background) information about the SLAs than SCOM ever will.

SCCM

With SP2 for SCCM out for just a couple of weeks, this product is growing up fast. Why?

R3

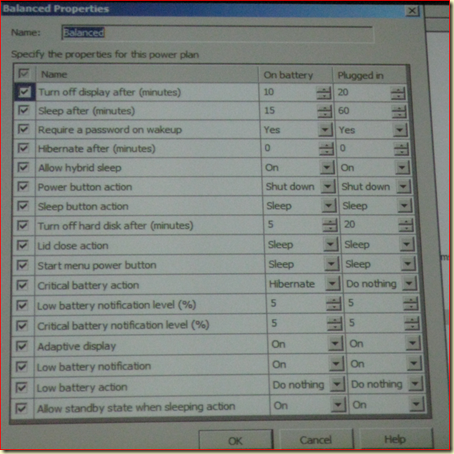

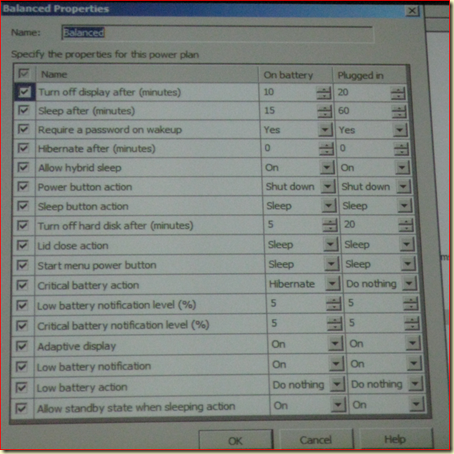

Yes, that’s right: R3 will come out in the first half of 2010. Besides many improvements to existing features, it will also contain a new client: the Power Management Client.

This will allow centralized power management of the clients. Microsoft is going green! Reports for this functionality will be added as well:

This release will also add support for Nokia E-series mobiles. Customers with Software Assurance (SA) are entitled to R3.

vNext

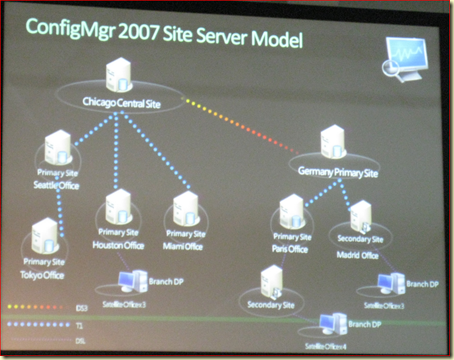

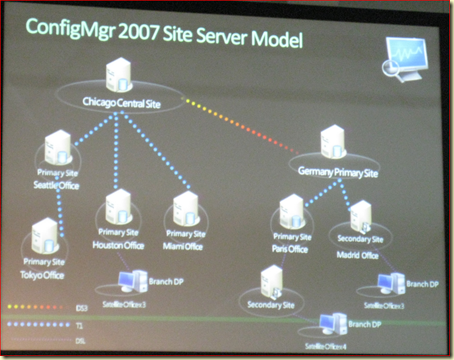

In 2011 SCCM vNext will be released, and here the changes are really huge. For instance, the hierarchy will be flattened. Check it out for yourself:

(SCCM today)

(SCCM vNext)

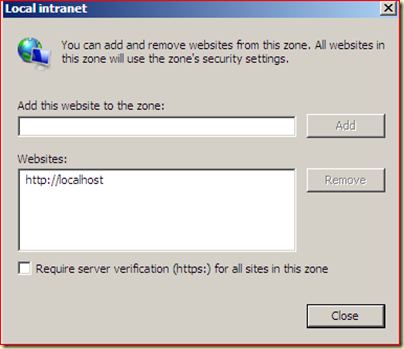

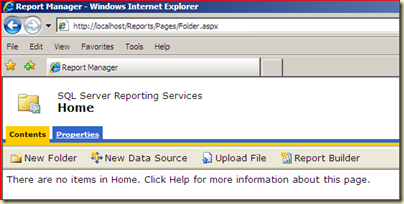

As I mentioned before, it will use the new UI; the MMC is dropped. This UI will be extensible as well. Besides tons of other changes, it will also include Mobile Device Manager (MDM) 2008 SP1. From 2010 onwards, MDM won’t be available as a separate product anymore. Some other changes are:

- SQL Server Reporting Services from SQL Server 2008 SP1 is used

- Self-healing capabilities for the clients

- Peer-to-peer distribution

- Web-based portal for the users

- In-Console Alerts

- New security model, based on Security Roles AND Security Scopes

- Support for anti-virus signatures: fully automated deployment of AV updates is supported!

- Desired Configuration Management (DCM) WITH auto-remediation for non-compliant registry-, WMI- and script-based settings

Umbrella SCSM

SCCM will be tightly integrated with SCSM as well. For instance, when DCM finds one or more clients drifting from their desired configuration, this will show up in SCSM too. That process can be automated even further with automatic ticket creation AND assignment. This is just a small example of all the possibilities.

Recap SCCM vNext

In short, SCCM vNext will be all about User Centric Management (targeted at the user), whereas today’s versions are focused on System Centric Management (targeted at the device).

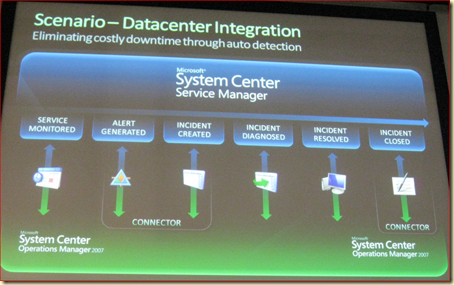

System Center Service Manager (SCSM)

This new product will go RTM in the first half of 2010. SCSM will be more than ‘JATS’ (Just Another Ticketing System). As Microsoft described it during one of the SCSM sessions I attended, it will be a ‘workflow automation platform’. So all IT processes as defined in ITIL/MOF can be modeled here. There will also be many connections to SCCM and SCOM, with SCSM becoming the umbrella of all SC products. Out of the box, SCSM will contain these processes (and the related workflows and forms):

- Incident Management

- Change Management

- Knowledge Management

SCSM will also be extensible to a huge extent, so companies can add their own knowledge, workflows and forms. SCSM will thus become a framework that can be adapted to organizational needs. For this, MPs are used here as well, with Classes, Rules and Workflows. Haven’t we seen that somewhere else? :)

SLA monitoring

With the data flowing in from SCCM and SCOM, very detailed SLA monitoring, forecasting and trending becomes a reality. Finally, IT management will really know what is going on.

Umbrella SCSM

As stated earlier, SCSM will become the umbrella where all other SC products come together. In the upcoming SCSM release this integration will already be strong; in the vNext edition it will go even further.

vNext

In 2011 SCSM vNext will be released. From what I heard, the integration with the other SC products will go further still. For instance, the Data Warehouse functionality for SCOM is likely to be hosted by SCSM.

Recap SCSM (2010 and vNext)

With SCSM, Microsoft will release the umbrella for many SC products. It will become the starting point for IT management to see how their IT environment is doing on multiple levels. By combining the reporting capabilities and workflows with the incoming data from SCCM and SCOM, real SLA measurement becomes possible. So SCSM is not JATS but much more. With the initial release in 2010 and the vNext version in 2011, in which the connectivity with other SC products becomes even stronger, a real powerhouse is about to be born. With the thrust, energy and resources Microsoft is putting into it, it will certainly become a success.

DPM

I am not a DPM specialist. All I can tell you is that the newest version, DPM 2010, will be released in the first half of 2010. As far as I know, no updated version will be released in 2011. What does DPM 2010 offer compared to the current edition? To be frank, I do not know exactly.

A respected colleague of mine, Matthijs Vreeken, is however an expert on that topic. So I will refrain from saying anything more about DPM (2010), since it is out of my league. Just keep a close eye on his blog, to be found here, since he can tell you much more about it.

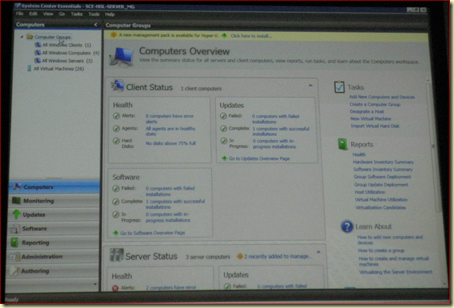

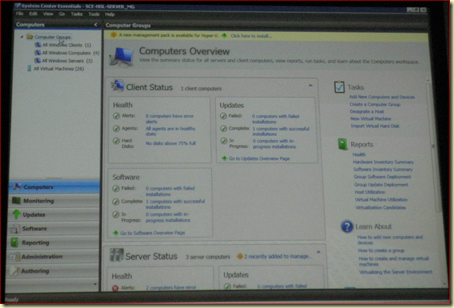

SCE

This product will get a huge overhaul. The newest version, SCE 2010, will be released in 2010 (the names aren’t very fancy: no SCE Fireball or SCE Dragon… :) which I am glad about!). There will be no vNext release in 2011. This is to be expected, since SCE is a standalone product, targeted at a specific market, without any real connections to other SC products.

SCE 2010 will have these SC capabilities/functionalities:

- Hyper-V Management

- SCOM functionality

- SCCM functionality

Since I have only seen some small demos of it, I can’t tell you anything more.