Issue

As a best practice in SCCM 2012x, you use dedicated Device Collections for patching. This makes it far easier to gain (and keep!) granular control over patch management as a whole. Also as a best practice, these dedicated patch Device Collections are placed in a dedicated folder and follow a sound naming scheme.

That folder contains subfolders as well, in order to differentiate between patch Device Collections for clients and for servers. So you end up with something like this:

But in order to stay on top of things, you need to KNOW exactly which servers are members of which patch Device Collection. You don’t want that special server which doesn’t allow an automatic reboot to end up in the patch Device Collection targeted by the ADR that allows automatic reboots… Ouch!

You also need this information for the process side of things, so you can use it in your service management system, such as SCSM.

Of course, you can open each patch Device Collection, copy its members and paste them into an Excel sheet. But that’s so time-consuming and so 1980s!

The PowerShell challenge & a ‘pat’ on the back of Coretech

But hey! We’ve got PowerShell these days! And SCCM 2012x is PowerShell driven! So why not write a PS script which does just that?

And while we’re at it, this PS script must enumerate the correct patch Device Collections (these patch-specific Device Collections come and go as required) and create a separate CSV file per patch Device Collection, named after that Device Collection.

In itself, this is nothing difficult to do with PowerShell. However, as it turned out, it was a challenge to enumerate the correct folder in the SCCM 2012x Console, since there isn’t a default PS cmdlet for it. Luckily, the community came to the rescue. I found several resources on how to achieve just that.

For me however, one blog stood out since it provided a ‘fix’ for it with just a few lines of PS code. Awesome! Yes, you need to obtain the FolderID by using a free tool (Coretech WMI and PowerShell Explorer tool). But when you’ve got that FolderID you’re in good shape.
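As an aside, the FolderID can probably also be looked up with a WMI query instead of the tool. The sketch below is my own assumption, not Coretech’s code: it queries the SMS_ObjectContainerNode class for Device Collection folders (ObjectType 5000) with a given name. The function name, the site code and the folder name in the example call are all hypothetical, and it relies on Get-WmiObject, so it assumes Windows PowerShell on a system that can reach the SMS Provider.

```powershell
# Sketch only: look up the ContainerNodeID (the FolderID) of a Device Collection folder by name.
# Get-DeviceCollectionFolderId is a hypothetical helper, not part of the original script.
function Get-DeviceCollectionFolderId {
    param(
        [Parameter(Mandatory = $true)] [string] $SiteCode,
        [Parameter(Mandatory = $true)] [string] $FolderName
    )

    # ObjectType 5000 identifies folders that hold Device Collections.
    $query = "SELECT Name, ContainerNodeID, ParentContainerNodeID " +
             "FROM SMS_ObjectContainerNode " +
             "WHERE Name = '$FolderName' AND ObjectType = 5000"

    Get-WmiObject -Namespace "ROOT\SMS\Site_$SiteCode" -Query $query |
        Select-Object Name, ContainerNodeID, ParentContainerNodeID
}

# Example call (placeholder values, replace with your own):
# Get-DeviceCollectionFolderId -SiteCode 'P01' -FolderName 'Servers'
```

When several folders share the same name, the ParentContainerNodeID column helps to pick the right one.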

Credits

Therefore a BIG word of thanks to Coretech, since they provided me with the correct information, the PowerShell code and the tool mentioned above, all for free, in true community spirit. All credits go to them, since without their posting this PS script couldn’t have been made and kept so simple.

How the PS script works

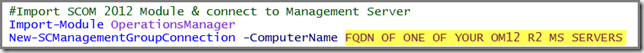

So now it’s time for the PS script. It does a couple of things, which I’ll explain here.

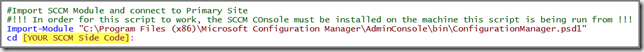

First and foremost, it’s best to run this PS script from an SCCM server.

And, again, YOU need to run the Coretech WMI and PowerShell Explorer tool FIRST in order to obtain the correct FolderID, which you’re going to use in this PS script.

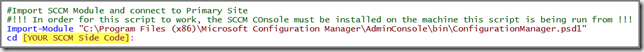

Replace [YOUR SCCM Side Code] with your site code:

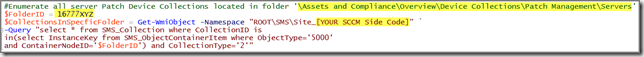

Replace the folder in the comment with the correct folder.

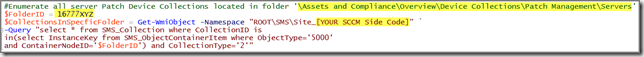

Replace the entry 16777XYZ after $FolderID with the correct one you’ve found with the Coretech WMI and PowerShell Explorer tool.

Replace [YOUR SCCM Side Code] with your site code after -Namespace "ROOT\SMS\Site_
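To make clear what the FolderID and the site code are used for, here is a minimal sketch of the enumeration step. It is my own reconstruction, not a copy of the script in the screenshots: it assumes that SMS_ObjectContainerItem links a folder (ContainerNodeID) to the collections in it (InstanceKey holds the CollectionID) and then fetches each collection from SMS_Collection. The function name is hypothetical, and Get-WmiObject means this is for Windows PowerShell.

```powershell
# Sketch only: list the Device Collections that live in one console folder.
# Get-CollectionsInFolder is a hypothetical helper name.
function Get-CollectionsInFolder {
    param(
        [Parameter(Mandatory = $true)] [string] $SiteCode,   # your own site code
        [Parameter(Mandatory = $true)] [int]    $FolderID    # the ID found with the Coretech tool
    )

    $namespace = "ROOT\SMS\Site_$SiteCode"

    # Each item in the folder carries the CollectionID in its InstanceKey property.
    $items = Get-WmiObject -Namespace $namespace -Query (
        "SELECT InstanceKey FROM SMS_ObjectContainerItem " +
        "WHERE ObjectType = 5000 AND ContainerNodeID = $FolderID")

    foreach ($item in $items) {
        # Fetch the collection itself so we have its name for the CSV file later on.
        Get-WmiObject -Namespace $namespace -Query (
            "SELECT CollectionID, Name FROM SMS_Collection " +
            "WHERE CollectionID = '$($item.InstanceKey)'")
    }
}

# Example call (fill in your own site code and FolderID):
# Get-CollectionsInFolder -SiteCode '<site code>' -FolderID <FolderID>
```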

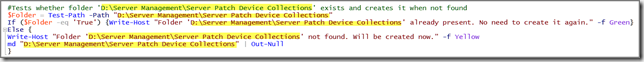

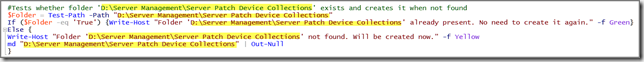

Create the folder D:\Server Management\Server Patch Device Collections on the system where you’re going to run this PS script. Of course, you’re free to use the C:\ drive as well.

This part of the PS script checks whether that folder is already present. When it isn’t found, the script creates it for you. Of course, you can modify this part of the PS script as required.
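For those who can’t read the screenshot, a folder check like this could look roughly as follows. This is a minimal sketch, not the exact code from the script; the function name Initialize-ExportFolder is made up for illustration.

```powershell
# Sketch only: make sure the export folder exists and return its full path.
# Initialize-ExportFolder is a hypothetical helper name.
function Initialize-ExportFolder {
    param(
        [Parameter(Mandatory = $true)] [string] $Path
    )

    # Create the folder (including missing parent folders) when it isn't there yet.
    if (-not (Test-Path -LiteralPath $Path -PathType Container)) {
        New-Item -ItemType Directory -Path $Path -Force | Out-Null
    }

    (Get-Item -LiteralPath $Path).FullName
}

# Example call with the folder used in this post:
# Initialize-ExportFolder -Path 'D:\Server Management\Server Patch Device Collections'
```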

Next, the script checks whether old CSV files exist in the folder D:\Server Management\Server Patch Device Collections:

When CSV files are found, the user is asked whether these CSV files may be overwritten by new ones:
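The check and the prompt could be sketched like this. Again, this is an illustration under my own assumptions and not the code from the screenshot: the function name and the prompt text are hypothetical, and the prompt is passed in as a script block so the logic can also be tried without typing an answer.

```powershell
# Sketch only: return $true when it is okay to write new CSV files into $Path.
# Confirm-CsvOverwrite is a hypothetical helper name.
function Confirm-CsvOverwrite {
    param(
        [Parameter(Mandatory = $true)] [string] $Path,
        # By default the user is asked; a different script block can be passed in for testing.
        [scriptblock] $Prompt = { Read-Host 'Old CSV files found. Overwrite them? (Y/N)' }
    )

    # Collect existing CSV files (folders are skipped).
    $oldCsv = @(Get-ChildItem -LiteralPath $Path -Filter '*.csv' |
        Where-Object { -not $_.PSIsContainer })

    # Nothing to overwrite, so the script can simply continue.
    if ($oldCsv.Count -eq 0) { return $true }

    # Only an explicit yes allows overwriting; anything else stops the script.
    $answer = & $Prompt
    return ($answer -match '^(y|yes)$')
}

# Example use:
# if (-not (Confirm-CsvOverwrite -Path 'D:\Server Management\Server Patch Device Collections')) { return }
```

Treating every answer other than Y or Yes as a no is a deliberately cautious choice, so a typo never overwrites files by accident.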

When the user is okay with overwriting the old CSV files, the script enumerates all (server) patch Device Collections, gets their members and pipes them into a CSV file, one per Device Collection. Each CSV file is named after its respective (server) patch Device Collection:
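The export step itself could look something like the sketch below. It is not the original script: it assumes the members are read from the SMS_FullCollectionMembership class by CollectionID, and both function names are hypothetical. The small helper that cleans up the file name is an extra of mine, in case a Device Collection name contains characters that aren’t allowed in file names.

```powershell
# Sketch only: write the members of one Device Collection to "<collection name>.csv".

# Hypothetical helper: replace characters that are not allowed in file names by an underscore.
function ConvertTo-SafeFileName {
    param(
        [Parameter(Mandatory = $true)] [string] $Name
    )
    $invalid = [System.IO.Path]::GetInvalidFileNameChars()
    ($Name.ToCharArray() | ForEach-Object {
        if ($invalid -contains $_) { '_' } else { $_ }
    }) -join ''
}

# Hypothetical helper: export the member names of a collection and return the CSV path.
function Export-CollectionMembers {
    param(
        [Parameter(Mandatory = $true)] [string] $SiteCode,
        [Parameter(Mandatory = $true)] $Collection,          # object with CollectionID and Name
        [Parameter(Mandatory = $true)] [string] $OutputFolder
    )

    # Every row in SMS_FullCollectionMembership is one member of one collection.
    $members = Get-WmiObject -Namespace "ROOT\SMS\Site_$SiteCode" -Query (
        "SELECT Name FROM SMS_FullCollectionMembership " +
        "WHERE CollectionID = '$($Collection.CollectionID)'")

    $csvPath = Join-Path $OutputFolder ((ConvertTo-SafeFileName -Name $Collection.Name) + '.csv')

    $members | Select-Object Name | Sort-Object Name |
        Export-Csv -Path $csvPath -NoTypeInformation

    $csvPath
}
```

Export-Csv overwrites an existing file with the same name, which is why the overwrite question is asked before this step runs.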

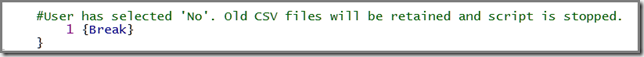

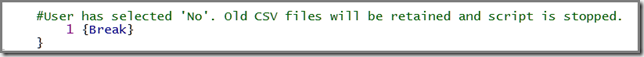

When the user chooses not to overwrite the existing CSV files, further script execution is stopped:

When no old CSV files are found, the script executes as intended and lets the user know when it has finished. It also reports where the newly created CSV files can be found:

The PS script

You can find the PS script here.

Remarks

Of course, you can make this script fancier, for example by asking the user where to place the CSV files and using the answer as a parameter in the script.
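A minimal sketch of that idea, assuming a made-up function name and prompt text: take the folder as a parameter and only ask the user when nothing was passed in.

```powershell
# Sketch only: use the given folder, or ask the user when none was given.
# Resolve-OutputFolder is a hypothetical helper name.
function Resolve-OutputFolder {
    param(
        [string] $OutputFolder
    )

    if ([string]::IsNullOrEmpty($OutputFolder)) {
        $OutputFolder = Read-Host 'Where should the CSV files be placed?'
    }

    $OutputFolder
}

# Example calls:
# Resolve-OutputFolder -OutputFolder 'C:\Temp\PatchCollections'   # no question asked
# Resolve-OutputFolder                                            # asks the user
```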

Or, when old CSV files are found, the script can move them to another folder and then continue.
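Moving the old files out of the way could be sketched as follows. The function name and the Archive_ plus timestamp naming of the subfolder are my own assumptions; pick whatever suits your environment.

```powershell
# Sketch only: move existing CSV files into a timestamped archive subfolder.
# Move-OldCsvToArchive is a hypothetical helper name.
function Move-OldCsvToArchive {
    param(
        [Parameter(Mandatory = $true)] [string] $Path
    )

    # Collect existing CSV files in the export folder itself (folders are skipped).
    $oldCsv = @(Get-ChildItem -LiteralPath $Path -Filter '*.csv' |
        Where-Object { -not $_.PSIsContainer })

    # Nothing to archive, so nothing is returned.
    if ($oldCsv.Count -eq 0) { return }

    # One archive folder per run, for example Archive_20240131_093000.
    $archive = Join-Path $Path ('Archive_' + (Get-Date -Format 'yyyyMMdd_HHmmss'))
    New-Item -ItemType Directory -Path $archive -Force | Out-Null

    $oldCsv | Move-Item -Destination $archive

    $archive
}

# Example call with the folder used in this post:
# Move-OldCsvToArchive -Path 'D:\Server Management\Server Patch Device Collections'
```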

Nonetheless, this script provides the basics with some additional checking (folder/CSV files). The rest I leave up to you, the community.

So feel free to make it more ‘posh’ and send me the results. If I’m impressed, I’ll share it on my blog and credit you.

And last but not least: Without Coretech this script wouldn’t have been so easy to make.

So, again, feel free to reach out and add your sources as well.
